
What does it mean to exhibit prosocial behavior? For our purposes, we mean behavior that leads to healthy collaboration and meaningful interactions online. Specifically, we are interested in prosocial behavior around online content sharing. How can we help people make the human connections that make sharing meaningful?

In this post, we distill learnings from the “How to encourage prosocial behavior” session at the recent CC Global Summit in Toronto, and outline the next steps for CC in this space. Specifically, Creative Commons will launch an investigative series into the values and behaviors that make online collaboration thrive, in order to embed them more deeply into the experience of sharing with CC.

Read on for all of the glorious details, or skip to the end for next steps.

Creative Commons: Remix by Creative Commons / CC BY-SA

When we launched our new strategy in 2016, we focused our work with user-generated content platforms on increasing discovery and reporting of CC-licensed works. Since the majority of the 1.2 billion CC-licensed works on the web are hosted by third parties, re-establishing relationships with them (e.g. Flickr, Wikipedia) was important, as was establishing relationships with newer platforms like Medium.

In year 2 of our strategy, we are broadening our focus to look more holistically at sharing and collaboration online. We are investigating the values and behaviors associated with successful collaboration, in the hopes that we might apply them to content platforms where CC licensing is taking place.

Creative Commons Global Summit 2017 in Toronto by Sebastiaan ter Burg / CC BY

As a first step, we asked the question “How can we help people make the human connections that make sharing meaningful?” at this year’s CC Global Summit in Toronto. (This question is also central to our 2016-2020 Organizational Strategy, where we refocused our work to build a vibrant, usable commons, powered by collaboration and gratitude.) In a session entitled “How to encourage prosocial behavior,” our peers at Medium, Wikimedia, Thingiverse, Música Libre Uruguay, and Unsplash shared the design factors they use to incentivize prosocial behavior around CC content, particularly behavior that helps people give credit for works they use and make connections with others. Programming diversity expert Ashe Dryden shared additional insights into how current platforms often approach design from a position of privilege, unwittingly excluding marginalized groups and potential new members.

We structured the discussion into three parts:

Part 1 – The Dark Side, or defining the problem. We asked our peers to detail examples of negative behaviors around content sharing and reuse.

Part 2 – What Works, or identifying solutions. We asked for technical and social design nudges that platforms have implemented that work to increase sharing and remix. We also discussed community-driven norms and behavior “in the wild.”

Part 3 – What can we do? We wrapped up by discussing what we might do together to design and cultivate online environments conducive to healthy and vibrant collaboration.

Part 1 – The Dark Side

In part 1, we heard the standard issues one might expect around CC licensing, as well as negative behaviors that occur with online content sharing more generally, regardless of the copyright status of that content. Issues specific to CC included users claiming works dedicated to the public domain under CC0 as their own and monetizing them; misunderstanding what it means to share a work under a CC license versus simply posting it online; and lack of clarity on when or how a CC license applies. More generally, negative behaviors around online content sharing included harassment based on gender, ethnicity, and other identities, which discourages potential new members from joining a community.

The behavioral tendencies and tensions we surfaced that were most relevant to the question — How can we help people make the human connections that make sharing meaningful? — were:

- Users prefer real people (user identities with a history of credible content contributions) to automated accounts. CC users are smart and can quickly recognize the difference between a real user and a bot.

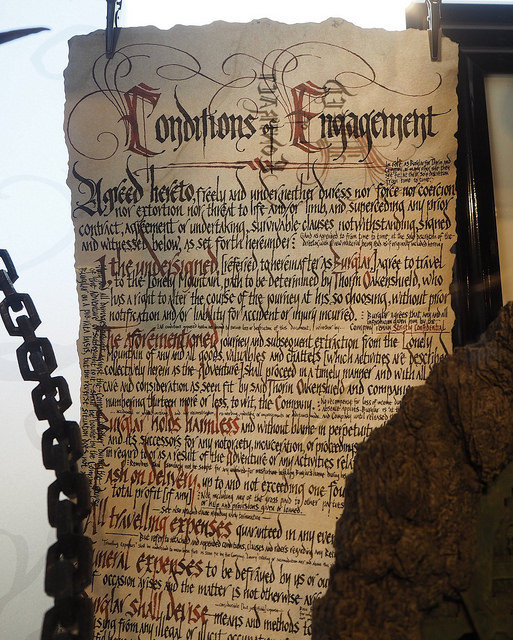

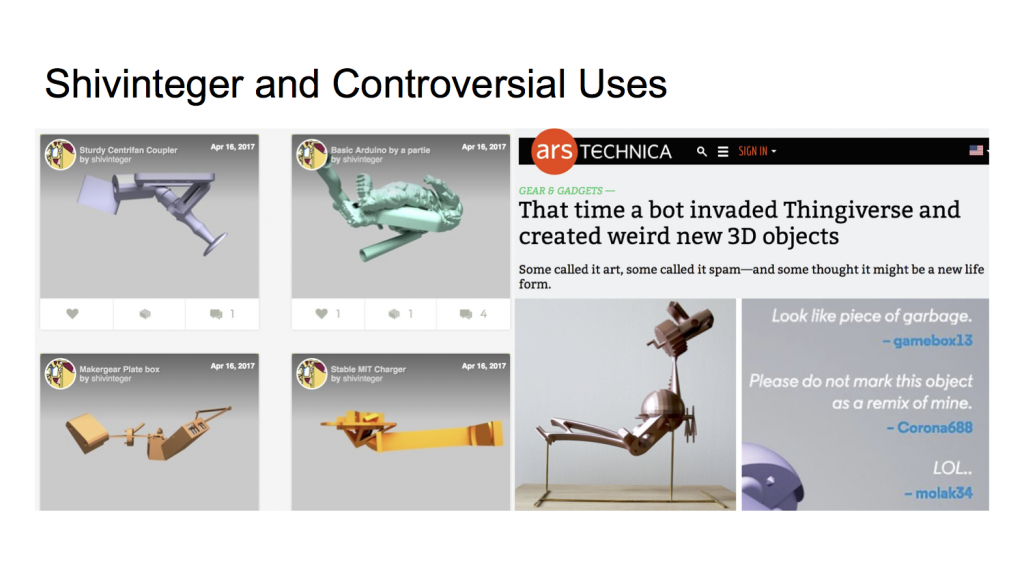

- Example: On Thingiverse, a 3D design sharing platform, a bot called Shiv Integer crawled the platform, generated bizarre remixes of users’ 3D designs, and posted them to the site automatically as remixes. Although the bot’s remixes complied with the CC license conditions, the community still felt it was an inappropriate use of their content.

“How to encourage prosocial behavior” session slide by MakerBot’s Tony Buser

- The harassment that marginalized groups face is more subtle than one might expect, and it often reinforces norms previously set in spaces that do not account for diversity of gender, ethnicity, or geography. Overreliance on data or AI may also reinforce these unintentionally discriminatory norms, because the data on which design decisions are based can be faulty or unrepresentative of marginalized groups. Ultimately, this prevents the community from attracting new members, particularly those from more diverse backgrounds, and from evolving into a truly open, inclusive environment that invites “creators across sectors, disciplines, and geographies, to work together to share open content and create new works.”

- Example: 80-85% of current Wikipedia editors are men from North America and Western Europe. 38% of Wikimedia users reported some level of harassment based on gender, ethnicity, and other identities, including stalking, doxing, and attacks off-wiki on other platforms such as Facebook.

- On platforms, users enter into legal contracts and social contracts that are separate and often in direct tension with one another. This plays out specifically in the world of copyright, where people’s desires and expectations about how their content will be used often differ from the rules dictated by copyright law (rules that are, in turn, embedded in CC licenses).

- Example: A user may post discriminatory, harassing, or violent speech on a platform under the guise of parody. As a copyright matter, parody is often a fair use. As an ethical matter, whether the parody constitutes harassment may be far less clear: a parody, as a satirical imitation, essentially mocks or belittles some aspect of the original work, and possibly its author. As a result, parody often generates confrontation and controversy in a community. With the legal and social contracts in tension, the platform must decide which to uphold for its users, and either decision can alienate one segment of its community. This example also raises the issue that many users expect a platform to enforce ethical norms, a role not all platforms take on.

- When different values are in tension with each other, it is hard to design community rules that can be applied both consistently and fairly. Some rules may even serve to disproportionately affect one group of people over others.

- Example: Medium has an “all-party consent” rule with respect to posting screenshots of personal 1:1 exchanges, such as text messages. This policy is designed to protect everyone’s expectation of privacy in personal communications. It is also designed to apply to all users equally, with some exceptions for situations involving public figures or newsworthy events. In some cases, however, this policy can prevent the person with less power in an exchange from fully telling their story, since it is often when a powerful or privileged person behaves badly in private that the other person involved wants to expose that behavior publicly. As a result, this policy may allow some users to keep evidence of their private bad acts out of public scrutiny, while preventing users from a marginalized group (who may benefit from exposing the interaction or fostering public discussion of it) from showing the details of an exchange they consider important.

Part 2 – What Works

In part 2, we learned about different platform approaches to incentivizing both contributions of content to the commons and prosocial behavior around that content, the kind of behavior that makes sharing personal and meaningful to users.

Platform approaches included:

- a prosocial framing of license selection: asking creators what kinds of uses they envision for their works, rather than which license they want to adopt

- a curated public space for licensed works, such as a radio channel, where the platform facilitates distribution to other users and potential fans

Screenshot of Radio Común by Música Libre Uruguay / CC BY-SA

- collaborative education projects facilitated by the platform, such as an FAQ about music licensing and distribution built with the community

- foregrounding the reuse and remix aspect of CC content in a variety of ways, such as: featuring remixes on the platform’s home page, displaying an attribution ancestry tree on the work’s page, sending alerts when a creator’s content is reused, and reminding users of the license on a work upon download

- remix challenges and related competitions, run by the platform to foster reuse

We also learned about approaches to disincentivize the negative behaviors and tendencies we discussed in part 1. These included:

- crowdsourced tracking of misuses and misbehaviors

- redirecting license violations to the community to handle (e.g. in community forums), using community pressure to encourage good behavior

- a safe space for new users to practice contributing before moving into the actual project, especially for projects whose contribution rules and norms are difficult for new members to get right the first time, e.g. the Teahouse space on Wikipedia

Screenshot of Teahouse page by Wikimedia Foundation / CC BY-SA

- follow-on events or projects to retain new members after initial engagement, such as face-to-face edit-a-thons

- allowing for flexibility with regard to many community rules, noting that some rules may disproportionately affect marginalized groups

- emphasizing a personal profile or identity for each user, so that other users recognize that these contributions were made by a real human being and not an anonymous internet entity

- adding friction into certain workflows, such as at the moment of publication of a post or comment, to discourage negative behaviors and remind the user that there is a real human being at the other end of the exchange

- making community rules more visible at the appropriate steps in the sharing process, so users are more likely to respond and adhere to them

Part 3 – What can we do?

In part 3, we discussed the importance of designing for good actors. Despite the examples of negative behaviors, the platforms in the room noted that the majority of CC uses have a positive outcome, and that people in content communities are passionate about Creative Commons as a symbol of certain values, and about sharing and remixing each other’s works. We shared positive examples that were entirely community-driven, such as unexpected reuses of a particular 3D design that led to the creation of a prosthetic hand, and a sub-community of teachers that split off to bring 3D design to students in the classroom.

More importantly, we discussed what we could do next, together. Here is the short list of ideas that emerged in the last half hour of our discussion:

- Prosocial behavior toolkit for platforms, a “prosocial” version of the CC platform integration toolkit we created in year 1 of our new strategy

- Reinvesting in the tools that make it easier to comply with CC license conditions, e.g. one-click attribution in browsers, WordPress, and other platforms

- API partnerships that bring CC media into platforms more easily, e.g. Flickr + Medium or WordPress

- Supporting the good actors and amplifying their voices

- Exploring areas like reputational algorithms, possibly community-driven, to discourage bad behaviors

- Exploring the role of bots in trolling and antisocial behavior, and how bots affect human behavior on a platform

- And more!

What’s next

Asking the simple question “How can we help people make the human connections that make sharing meaningful?” elicited an exciting discussion and surfaced issues we had not considered before this summit session. Informed by the overwhelming response and interest from our platform partners and community, we will forge ahead with the next phase of our platform work, focusing on collaboration in addition to discovery.

As a next step, Creative Commons is launching an investigative series into what really makes online collaboration work. What does it take to build a vibrant sharing community, powered by collaboration and gratitude? What are the design factors (both social and technical) that help people make connections and build relationships? How do we then take these factors and infuse them into communities within the digital commons?

The series will include:

- A series of face-to-face conversations on how to build prosocial online communities with CC platforms, creators, and researchers. To kick off the series, we are hosting a private event in San Francisco this June; stay tuned for public follow-on events in the series in a city near you!

- Research and storytelling, through interviews and thought pieces, about how collaboration unfolds over time.

- A prosocial platform toolkit that distills all of the applicable design factors into one comprehensive guide.

- Reporting of the most impactful stories in the next State of the Commons.

If any of this is of interest to you, join the conversation on Slack.

If you would like to be invited to a future event, sign up for our events list.

Posted 16 June 2017