Artificial Intelligence and Creativity: Can Machines Write Like Jane Austen?

In the second part of our series on artificial intelligence (AI) and creativity, we dive into the workings of AI to determine whether it is capable of creating works eligible for copyright protection. Below, we present two examples of an AI system generating arguably novel content through two different methods: a Markov chain and an artificial neural network. We then apply the copyright eligibility criteria explained in “Artificial Intelligence and Creativity: Why We’re Against Copyright Protection for AI-Generated Output” to each example.

Here’s the gist: The Jane Austen examples below show that the seemingly “creative” choices made by the AI system are not attributable to any causal link between a human and the result, nor does a human define the final form or expression of the work. The elements of randomness built into an AI program are what give the illusion of creativity, and the closer the output comes to resembling a human’s creative work, the higher the similarity and thus the lower the originality. All this leads us to conclude that the copyright protection requirements of authorship and originality are not satisfied.

Method 1 – Markov chain

Suppose you wanted to develop an AI system that could write like English novelist Jane Austen (1775-1817). To do this, you might model the writing of a sentence as a Markov chain. First discovered by Andrey Markov, a Markov chain is a stochastic model describing a sequence of events in which the distribution of possibilities for the next event depends only on the current state of the sequence. These models were first applied to language by Claude Shannon in his groundbreaking paper, “A Mathematical Theory of Communication.”

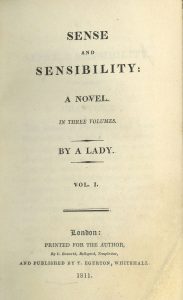

An image of the title page from the first edition of Jane Austen’s “Sense and Sensibility” (1811). This image is in the public domain via the Lilly Library at Indiana University.

For example, the word “Mrs.” (capitalized and with punctuation) occurs 2,157 times in the complete works of Jane Austen, and the words that follow it include “Annesley,” “Gardiner,” “F.”, etc. The AI system would then randomly select from the list of words that follow “Mrs.” to get a possible continuation of a sentence starting with “Mrs.” Leaving the repeated elements in the list and selecting from it uniformly at random ensures that words occurring more frequently after the “seed” (or initial) word are more likely to be selected.
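The follower list described above can be sketched in a few lines of Python. This is only an illustration: the tiny corpus is made up (it is not Austen’s text), and the names `build_followers` and `followers` are our own.

```python
import random
from collections import defaultdict

def build_followers(text):
    """Map each word to the list of words that follow it, duplicates kept."""
    words = text.split()
    followers = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return followers

# Tiny made-up corpus standing in for the complete works of an author.
corpus = "Mrs. Gardiner smiled . Mrs. Gardiner spoke . Mrs. Annesley listened ."
followers = build_followers(corpus)

# "Gardiner" is stored twice and "Annesley" once, so a uniform draw from
# the list favours "Gardiner" without any explicit frequency counting.
print(followers["Mrs."])
next_word = random.choice(followers["Mrs."])
print("Mrs.", next_word)
```

Keeping duplicates in the list is the whole trick: the list itself acts as the frequency table.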

Let’s say the AI system randomly selects “Annesley” from the list to follow “Mrs.” This process can then be repeated with the list of words that follow “Annesley.” The word “Annesley” is less common (occurring only two times) and is followed by “to” and “is.” This process can be repeated multiple times to create a growing sentence stub and eventually construct something that resembles a sentence, like:

- Mrs. Annesley to it, to be mistress as the room for drawing room to be at the best known intimately judge wisely.

This “sentence” uses real words, which are chosen from Austen’s works, but doesn’t make much sense linguistically or grammatically. In order for the AI system to have more context when choosing words, a standard idea is to try to find words that follow multi-word snippets, rather than single words. In this example, you might look at the list of words that follow the two-word snippet “Mrs. Annesley,” which include “to” and “is.” Note: These are the same words that follow the one-word snippet, “Annesley.”
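As a rough sketch of the multi-word idea, the follower lists can be keyed on snippets of `k` words instead of single words. The corpus here is invented for illustration, and `build_model` and `generate` are hypothetical helper names, not part of any library.

```python
import random
from collections import defaultdict

def build_model(words, k):
    """Map each k-word snippet to the list of words that follow it."""
    model = defaultdict(list)
    for i in range(len(words) - k):
        model[tuple(words[i:i + k])].append(words[i + k])
    return model

def generate(model, seed, max_words, rng=random):
    """Extend `seed` (a list of k words) one randomly chosen word at a time."""
    k = len(seed)
    out = list(seed)
    while len(out) < max_words:
        choices = model.get(tuple(out[-k:]))
        if not choices:  # the last k words never occur mid-corpus: stop
            break
        out.append(rng.choice(choices))
    return out

# Invented corpus; a real run would use the author's full text.
corpus = ("she was glad to see him and she was glad to hear it "
          "and she was sorry to go").split()
model = build_model(corpus, k=2)
sentence = generate(model, ["she", "was"], max_words=12)
print(" ".join(sentence))
```

With `k=1` this reduces to the one-word method. Larger values of `k` give each choice more context but leave fewer candidate continuations.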

If you randomly select “to” to follow “Mrs. Annesley,” then you have to find the list of words following the snippet “Annesley to,” and so on. Continuing in this manner, you could construct a sentence like:

- Annesley to Miss Thorpe, and then only think! I found that I am sure you would think tall, and on this second interview, such she did not doubt your inclination.

Although this sentence is fragmented, it makes more sense than the previous sentence constructed from one-word snippets. Now let’s look at an example constructed using three-word snippets, starting with “Mrs. Annesley to”:

- Mrs. Annesley to Miss Darcy had been standing near enough for her to refuse the correspondence; it was impossible for me not to hear it.

At this level of context, we’re starting to see correct grammar. The sentence almost seems like it could have been written by Austen herself (on a bad day). However, this sentence is completely machine-generated. The longest snippet of words in this sentence that also appear consecutively in Austen’s works is:

- …for her to refuse the correspondence; it was impossible for…

However, the context around that snippet is different from Austen’s original work and is actually composed of different sections from several of her works. Here’s an example using four-word snippets, starting with “Mrs. Annesley to Miss”:

- Mrs. Annesley to Miss Darcy had been given, to remind her of what anxiety was; but when she saw, on her frequent and minute examination, that every symptom of recovery continued, and saw Marianne at six o’clock sink into a quiet, steady, and to all appearance comfortable, sleep, she silenced every doubt.

In this case, the method is beginning to lose originality. In fact, this sentence is composed of two snippets directly from Austen’s original works:

- Mrs. Annesley to Miss Darcy had been given, to remind her of…

- …to remind her of what anxiety was; but when she saw, on her frequent and minute examination, that every symptom of recovery continued, and saw Marianne at six o’clock sink into a quiet, steady, and to all appearance comfortable, sleep, she silenced every doubt.

These snippets are stitched together at “to remind her of.” The first snippet is from Austen’s novel Pride and Prejudice and the second is from Sense and Sensibility.
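One way to check how much of a generated sentence is copied verbatim is to look for the longest run of consecutive words it shares with the source text. The sketch below does this with a simple dynamic-programming table. The two word lists are made up for illustration, and `longest_shared_run` is a hypothetical helper name.

```python
def longest_shared_run(generated, source):
    """Return the longest run of consecutive words found in both word lists."""
    best_len, best_end = 0, 0
    # prev[j] = length of the shared run ending at generated[i-2], source[j-1]
    prev = [0] * (len(source) + 1)
    for i in range(1, len(generated) + 1):
        curr = [0] * (len(source) + 1)
        for j in range(1, len(source) + 1):
            if generated[i - 1] == source[j - 1]:
                curr[j] = prev[j - 1] + 1
                if curr[j] > best_len:
                    best_len, best_end = curr[j], i
        prev = curr
    return generated[best_end - best_len:best_end]

# Made-up example texts, split into words.
source = "it was impossible for me not to hear it".split()
generated = "for her it was impossible for him to hear".split()
run = longest_shared_run(generated, source)
print(" ".join(run))
```

The longer this run is relative to the whole sentence, the less of the output is new. This version compares every pair of positions, so it is only practical for short texts.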

From these examples, it’s clear that expanding the “context” (i.e., the snippet length) increases the probability that the AI system will produce something akin to proper English, but it also decreases the originality of the output. To increase originality, the system requires more text from the original author’s works to be given as input. Even with this simple method, a system can produce fairly realistic English prose. In fact, the actual limit on the quality of content generated by this method turns out to be processing power, computation time, and storage. Also, since the goal is to generate prose only in the style of Jane Austen, the set of possible input text is limited to her works.

The Markov chain described above is just one example of a more general concept called a language model. In technical terms, language models are probability distributions over sequences of words in a language. In our case, we are interested in the probability that a word will occur as the next word, given a sequence of words up to some point. In this model, selecting at random from the probability distribution of possible words following the sequence up to the current point allows us to generate “prose.” As of this writing, one of the most recent large language models is called GPT-3, and was produced by an organization called OpenAI.
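For the Markov chain above, this probability can be written down directly. In the sketch below, the symbols are our own notation: $w_t$ is the next word, $k$ is the snippet length, and $\mathrm{count}(\cdot)$ is the number of times a run of words appears in the input text.

```latex
P(w_t \mid w_{t-k}, \dots, w_{t-1})
  \approx \frac{\mathrm{count}(w_{t-k}, \dots, w_{t-1}, w_t)}
               {\mathrm{count}(w_{t-k}, \dots, w_{t-1})}
```

Using the counts quoted earlier with $k = 1$: “Annesley” occurs twice, followed once by “to” and once by “is,” so $P(\text{to} \mid \text{Annesley}) = 1/2$.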

Method 2 – Artificial neural network

GPT-3 is a considerably more sophisticated model than the Markov chain. In fact, it’s an example of an artificial neural network (ANN). An ANN model is quite complicated, but it’s basically a computational model based on the neural networks of the human brain.[1] Just as our brains are composed of interconnected processing elements (i.e., neurons) that process information, this artificial system also consists of a network of interconnected elements that work together to solve a specific problem. Further, just as humans learn when given more information and subsequently change their actions to solve a problem, this artificial system also learns based on its inputs and outputs.

For example, to train an ANN model to predict the next word in a sequence, we make many predictions per second from different snippets of text and use a mathematical process to adjust the ANN model after each incorrect prediction. The adjustments take the form of slight changes to the values of different numerical parameters in the model. Because the same parameters are used for each snippet, we need many of them to make a model general enough to make predictions from any arbitrary input sequence. (The large version of GPT-3 has around 175,000,000,000 parameters!) After several iterations of the process above to improve the model, we can generate new text by feeding the model existing text, appending whatever word it predicts next, and finally feeding the result back into the model. In reality, this process is a bit more complicated than described above, but the general idea is that it allows us to generate a novel output on each run, rather than the same thing over and over.
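The predict, adjust, and feed-back loop can be illustrated with a toy model. To be clear about what is swapped in: this is not a neural network like GPT-3. It is a single table of parameters (one score per pair of words) trained by gradient descent on a made-up corpus, and all names in it are our own. It only shows the shape of the process.

```python
import math
import random

def softmax(scores):
    """Turn a list of raw scores into probabilities that sum to 1."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def train(words, vocab, epochs=200, lr=0.5):
    """Learn one score per (previous word, next word) pair."""
    index = {w: i for i, w in enumerate(vocab)}
    # The model's numerical parameters, all starting at zero.
    params = [[0.0] * len(vocab) for _ in vocab]
    for _ in range(epochs):
        for prev, nxt in zip(words, words[1:]):
            row = params[index[prev]]
            probs = softmax(row)
            # Slightly adjust each parameter: raise the score of the word
            # that actually came next, lower the scores of the others.
            for j in range(len(vocab)):
                target = 1.0 if j == index[nxt] else 0.0
                row[j] -= lr * (probs[j] - target)
    return params, index

def generate(params, index, vocab, seed, length, rng=random):
    """Feed the model its own output: predict, append, repeat."""
    out = [seed]
    for _ in range(length):
        probs = softmax(params[index[out[-1]]])
        out.append(rng.choices(vocab, weights=probs, k=1)[0])
    return out

# Made-up corpus: "was" is followed by "glad" twice and "sorry" once.
words = "she was glad and she was sorry and she was glad".split()
vocab = sorted(set(words))
params, index = train(words, vocab)
probs = dict(zip(vocab, softmax(params[index["was"]])))
print({w: round(p, 2) for w, p in probs.items()})
print(" ".join(generate(params, index, vocab, "she", 8)))
```

After training, the probability of “glad” following “was” should sit near two-thirds, matching the corpus. Because generation samples from the predicted probabilities rather than always taking the top word, each run can produce a different output.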

Unfortunately, Brent (CC’s data engineer) couldn’t run the large model on his laptop, so he settled for using GPT-3’s predecessor, GPT-2, in a version that has only 117,000,000 parameters. The model comes “trained” out of the box, meaning it has already gone through many iterations of the process described above on English text. A user can “fine-tune” the model by performing further iterations on a sample of English text of their choosing. Here is an example of the output after fine-tuning the model for around 10 minutes on Jane Austen’s work:

- “Yes, I suppose,” replied Emma, “but I do not think she does a great deal of good, of course, I dare say; but if she could, she might, I must say, but she is a great lady at heart, I do not know whether we know that Mr. Elliot is his kindest sister.”

Note that while it’s not making much sense as a story, there are no real grammatical mistakes, and the “voice” does seem to closely echo Jane Austen’s. In general, every AI method for generating novel content, written or otherwise, involves developing a (potentially quite sophisticated) mathematical model that emulates some intelligent behavior. Then, content can be generated by selecting randomly from a probability space defined by that model.

Applying copyright theories to our AI-generated Jane Austen sentences

A remix of the 1870 engraving of Jane Austen in the public domain via the University of Texas and the collage titled “Brain Scandal” by Kollage Kid licensed CC BY-NC-SA.

On a theoretical level, ideas regarding “authorship” and “originality” as we examined them in the first post of this series appear to be at odds with any conception of AI (i.e., non-human) creativity. As we’ve seen in our Jane Austen example, the seemingly “creative” choices made by the AI system are not attributable to any causal link between a human and the result, nor does a human define the final form or expression of the work. Where humans (such as AI programmers or users) are indeed involved in the creation of AI-generated output in the models described above, this involvement is solely mechanical, and not authorial or creative. The elements of randomness built into an AI program are what give the illusion of creativity, and the closer the output comes to resembling a human’s creative work, the higher the similarity and thus the lower the originality. All this leads us to conclude that the copyright protection requirements of authorship and originality are not satisfied.

All said, as much as AI has advanced in the past few years, there exists no clarity, let alone consensus, over how to define the nascent and uncharted field of AI technology. Any attempt at regulation is premature, especially through an already over-taxed copyright system that has been commandeered for ends that extend well beyond its originally intended purposes. AI needs to be properly explored and understood before copyright or any intellectual property issues can be properly considered. That’s why AI-generated outputs should be in the public domain, at least pending a clearer understanding of this evolving technology.

Notes

1. In more technical terms, an ANN can be defined as a class of functions that take vectors in from some vector space and map those vectors to a different vector space. Transitions between functions within the class are defined via an operator which is itself a mathematical function. The operator is designed to “train” the ANN model by minimizing some cost function associated with the output.

Posted 10 August 2020