Experts Weigh In: AI Inputs, AI Outputs and the Public Commons

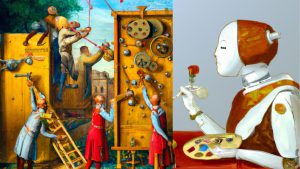

“AI Inputs and Outputs” by Creative Commons was made from details from two images generated by the DALL-E 2 AI platform with the text prompts “A Hieronymus Bosch triptych showing inputs to artificial intelligence as a Rube Goldberg machine; oil painting” and “a robot painting its own self portrait in the style of Artemisia Gentileschi.” CC dedicates any rights it holds to the image to the public domain via CC0.

On 9 and 10 November 2022, Creative Commons hosted a pair of webinars on artificial intelligence (AI). We assembled two panels of experts at the intersection of AI, art, data, and intellectual property law: one to look at issues related to AI inputs (works used in training and supplying AI), and another focused on how open works and better sharing intersect with AI outputs (works generated by AI that are, could be, or should be participating in the open commons).

In both webinars, we were looking to explore how the proliferation of AI connects to better sharing: sharing that is inclusive, just and equitable, where everyone has wide opportunity to access content, to contribute their own creativity, and to receive recognition and rewards for their contributions. We also wanted to explore how the proliferation of AI connects to a better internet: a public interest vision for an internet that benefits us all.

AI Inputs

Our first panel, AI Inputs and the Public Commons, looked at AI training data. The panel for this discussion included Abeba Birhane, the Senior Fellow in Trustworthy AI at the Mozilla Foundation; Alek Tarkowski, the Co-Founder and Director of Strategy for the Open Future Foundation; Anna Bethke, the Principal Ethical AI Data Scientist for Salesforce; and Florence Chee, Associate Professor in the School of Communication and Director of the Center for Digital Ethics and Policy at Loyola University in Chicago. Stephen Wolfson, Associate Director for Research and Copyright Services, School of Law, University of Georgia, moderated the panel.

AI Inputs focused on the potential harms that can come from AI systems when they are trained on problematic data. AI models require massive amounts of training data to function, and in the past, developers had to hand-curate AI datasets. Today, however, large-scale datasets are readily available thanks to the abundance of content on the internet, and these datasets have enabled AI models of unprecedented power.

But along with the availability of these massive datasets, concerns have arisen over the content they contain and how they are being used. Abeba Birhane, who audits large datasets as part of her research, has found illegal, racist, and/or unethical content in datasets that are used to train common AI models. Using this data in turn embeds biases into AI models, which tends to harm marginalized groups disproportionately. Even if the content contained within these datasets is not illegal, it may have been obtained without affirmative consent from the creators or subjects of those data. People are also rarely able to withdraw their data from these datasets. Indeed, because the datasets contain millions or even billions of data points, it may be practically impossible to remove pieces of content from them.

At the same time, obtaining individualized consent from everyone who has data in one of these datasets would be incredibly difficult, if not impossible. Moreover, a consent requirement would likely constrain the development of AI considerably, because it would be so hard to get permission for every element in the large datasets that have driven recent innovation in AI.

Unfortunately, there is no clear solution to the problems associated with AI inputs. Our panel agreed that some legal regulation over AI training data can be useful to improve the quality and ethics of AI inputs. But regulation alone is unlikely to solve the many problems that arise. We also need guidelines for researchers who are using data, including openly licensed data, for AI training purposes. For instance, guidelines may discourage the use of openly licensed content as AI inputs where such a use could lead to problematic outcomes, such as with facial recognition technology, even if the use does not violate the license. Public discussions like this are essential to developing a consensus among stakeholders about how to use AI inputs ethically, to raising awareness of these issues, and to improving AI models going forward.

Links shared by panelists and participants

- Recommendation on the Ethics of Artificial Intelligence

- The New Legal Landscape for Text Mining and Machine Learning by Matthew Sag

- OSI’s Deep Dive is an essential discussion on the future of AI and open source

- Podcast Archive – Deep Dive: AI

AI Outputs

We continued our conversation about AI the following day with our second panel, AI Outputs and the Public Commons. For this panel, we brought together a group of experts that included artists, AI researchers, and intellectual property and communications scholars to discuss generative AI. This panel included Andres Guadamuz, a reader in intellectual property law at the University of Sussex; Daniel Ambrosi, an artist who uses AI technology to help him produce original, creative works; Mark Riedl, a professor at the Georgia Institute of Technology School of Interactive Computing; and Meera Nair, a copyright specialist at the Northern Alberta Institute of Technology. Kat Walsh, General Counsel for Creative Commons, moderated the panel.

While our second panel touched on many topics, the issue that ran through most of the conversation on AI outputs was whether works produced by artificial intelligence are somehow different from works produced by humans. Right away, the panel questioned whether this distinction between human-created works and AI-generated content makes sense. Generative AI tools exist along a spectrum from those that require the least human interaction to those that require the most, and, at least with modern AI systems, humans are involved at every step of AI content creation. Humans both develop the AI models and use the systems to produce content.

Since truly autonomously generated works do not exist at present — that is, art produced entirely by AI without any human intervention at all — our panelists suggested that perhaps it is better to think about AI systems as a type of art tool that artists can use, rather than something entirely new. From the paintbrush, to the camera, to generative AI, humans have used technology to produce art throughout history, and these technologies always raise questions about the nature of art and creativity.

Still, there are some important differences between AI and human creations. For example, AI systems can create new works at a scale and speed that humans cannot match. AI is not bound by human limitations when creating content. AI doesn’t need to sleep, eat, or do the other things that slow down human artistic creation. AI can produce all the time, without distraction or pause.

Because AI can produce vast amounts of content, our panelists discussed whether AI-generated content belongs in the public domain or whether it should receive copyright protection. Andres Guadamuz raised an interesting point that is front and center for us at Creative Commons: should AI-generated works be in the public domain by default, or is copyright protection, in fact, a better option? He encouraged the community to consider whether it is desirable to put AI-generated content in the public domain, if that could result in harm to human artists or chill human creativity. If copyright exists to encourage the creation of new works, could a public domain that is filled with AI-generated content discourage human creation by making too much art available for free? Would an abundance of AI-generated content put human artists out of work?

At the same time, the panel also recognized that the public domain is necessary for the creation of art. Artists, human and AI alike, do not create in a vacuum. Instead, they build upon what has come before them to produce new works. AI systems create by mixing and matching parts they learn from their training data; similarly, humans experience art and use what has come before them to produce their own works.

CC has addressed AI outputs and creativity a few times in the past. In general, we believe that AI-generated content should not qualify for copyright protection without direct and significant human input. We have been skeptical that AI creations should be considered “creative” in the same way that human works are. And, importantly, we believe that human creativity is fundamental to copyright protection. As such, copyright is incompatible with outputs generated by AI alone.

Ultimately, like our panel on AI inputs, this panel on AI outputs could not, and was not designed to, solve the many issues our panelists discussed. With AI becoming an increasingly integral part of our lives, conversations like these are essential to figuring out how we can safeguard against harms produced by AI, promote creativity, and ultimately use AI to benefit us all. Creative Commons plans to be in the middle of the conversation about the intersection of AI policy and intellectual property rights. In the upcoming months, we will continue these discussions through an ongoing series of conversations with experts as we try to better understand how we should make sense of AI policy and IP rights.

Links shared by panelists and participants

- The Runaway Species: How Human Creativity Remakes the World by David Eagleman and Anthony Brandt

- Dreamscapes – Daniel Ambrosi

- Neural Style Transfer: Using Deep Learning to Generate Art

- Artificially intelligent painters invent new styles of art | New Scientist

- An interview with David Holz, CEO of AI image-generator Midjourney: it’s ‘an engine for the imagination’ – The Verge

- Fine Art and the Unseen Hand. Reconsidering the role of technology in… | by Daniel Ambrosi | Medium

- @FairDuty Tweet: CIPO appears to have ignored the requirement of originality in terms of what qualifies for (c) protection — an exercise of skill and judgement that is more than trivial – so said by our Supreme Court in 2004 (CCH). Fascinating thread of discussion about AI output and copyright.

- List of tools for creating prompts for text-to-image AI generators

- Ed Sheeran Awarded Over $1.1 Million in Legal Fees in ‘Shape of You’ Copyright Case

- Selling Wine Without Bottles: The Economy of Mind on the Global Net | Electronic Frontier Foundation

- Caspar David Friedrich

- You Can’t Copyright Style — THE [LEGAL] ARTIST

- Part 1: Is it copyright infringement or not? – Arts Law Centre of Australia

- This artist is dominating AI-generated art. And he’s not happy about it. | MIT Technology Review

- The Hard Drive With 68 Billion Melodies